Knowledge graphs are being used in the field of machine learning for various applications including question & answering, link prediction, fact checking, entity disambiguation etc. For many of these applications finding the missing relationships in the graph is important to ensure, completeness, correctness and quality. This involves the task of entity prediction and relationship prediction. Basically a knowledge graph is a collection of entities and relationships between them in the form of RDF style triples (h, r, t) where h represents a head entity, t being the tail entity and r the relationship between the head and the tail entity.

Entity prediction is, given a h, r of a triple predict t and relationship prediction is, given h, t predict r the type of relationship between them. In this post I will discuss a preliminary method for entity prediction task known as TransE [1], an energy based model that learns continuous, low-dimensional embedding of entities and relationships by minimising a margin-based pair-wise ranking loss.

The idea behind TransE is, the relationship r is modelled as a translation between the head and the tail entity in the same low-dimensional plane. Therefore if a relationship (h, r, t) exists in the knowledge graph, the learnt embedding of (h+r) should be closer to the embedding of t meaning (h+r) ≈ t. If there is a negative or false triple such as (h’, r, t’) then the distance between the embedding of (h’+r) and t’ should be larger. The energy function E(h, r, t) is defined to be the L1 or L2 distance between the h+r (translated head entity) and t (tail entity). Now lets see how such embeddings can be learnt from a training set S containing a set of positive (h, r, t) triples.

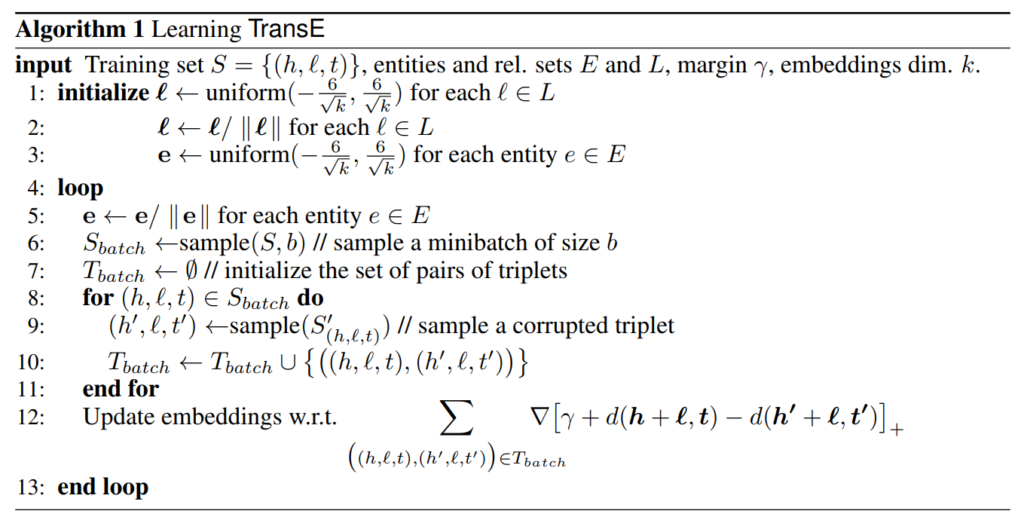

Lets say the entity embedding and relationship embedding to be learnt is e and l, full list of entities E and relationships L, the training set S ∈ {(h, r, t)} containing all positive triples, the first step is to initialise e and l with uniform embedding vectors for each entity in E and each relationship in L. Before we can go in to the training phase we also need negative samples in order to optimise a pair-wise ranking loss function. This is done by corrupting positive triples by either removing the head or tail and replacing it with a random entity from E. We call this the corrupted triples set S’ ∈ {(h’, r, t’)}. Optimisation happens in mini batches. Therefore a mini-batch Sb is sampled from S of batch size b and for each positive triple in Sb, a negative triple is sampled from the corrupted triples set S’ . Optimisation happens now using stochastic gradient descent for each positive and negative triple pair in the mini-batch set, minimising a margin based ranking loss function given by,

![]()

over the possible h, r, t embeddings . At each gradient step towards the minimum, the embeddings are updated with constant learning rate. The pseudocode for the algorithm is given below.

Training happens over 1000 epochs and model hyper-parameters such as learning rate α, margin γ, distance measure d and embedding dimension k are tuned on a validation set based on performance with early stopping. For testing, knowledge graphs extracted from Freebase and DBpedia have been used.

So far we have seen the basics of TransE which learns a knowledge graph embedding used to predict links. Further other models were proposed improving TransE on how the embedding planes are computed such as TransR, TransA, TransH. Instead of using 1-step relations (h, r, t), 2 step or more relations (h, r1, r2, t) were used in the PTransE and RTransE model. Other related models include SE, HolE, Knowledge Vault, NTN, CVSM and many more. Recently another model has been proposed known as ProjE which we would explore in future posts.

References:

[1] Bordes, A., Usunier, N., Garcia-Duran, A., Weston, J., & Yakhnenko, O. (2013). Translating embeddings for modeling multi-relational data. In Advances in neural information processing systems (pp. 2787–2795).