The content of English-language online news over five years was analyzed to explore the impact of the Fukushima disaster on media coverage of nuclear power. This big data study, based on millions of news articles, involves the extraction of narrative networks, association networks, and sentiment time series. We gathered over 5 million science articles between 1 May 2008 and 31 December 2013.

To examine how the mass media frame different science-based issues and events, we analyzed the context in which scientific concepts and associated actors are mentioned. We refer to these collectively as ‘items’; they include scientific topics, universities and diseases.

Firstly, we compute time series of the salience of each item, along with the sentiment surrounding it, showing how the amount of attention and the opinions about the items changed during the period covered by this study. Secondly, we mine association rules between items to discover how closely they are associated, based on how often they co-occur in the same science articles. This reveals which concepts are most closely associated with one another, which actors are most relevant to them, and how different actors interact with each other. Thirdly, we extract Subject-Verb-Object (SVO) triplets for each item, allowing us to quickly discover which entities perform actions on the item and which actions the item itself performs. This also allows us to analyze the action cloud of an item, showing the collective actions taken by or on it.
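The association step can be illustrated with a small sketch. It assumes each article has already been reduced to the set of items it mentions, and it scores a rule `a -> b` by its confidence (the share of articles mentioning `a` that also mention `b`). The function name `association_rules`, the `min_support` threshold, and the toy data are hypothetical and chosen only for illustration; the study's actual rule-mining settings are not given here.

```python
from collections import Counter
from itertools import combinations


def association_rules(articles, min_support=2):
    """Return {(a, b): confidence} for the rule a -> b.

    articles: list of sets, each holding the items mentioned in one article.
    min_support: minimum number of articles in which a pair must co-occur
                 (an assumed threshold, not taken from the study).
    """
    item_counts = Counter()
    pair_counts = Counter()
    for items in articles:
        items = set(items)
        item_counts.update(items)
        # Count each unordered pair once per article.
        pair_counts.update(combinations(sorted(items), 2))

    rules = {}
    for (a, b), n in pair_counts.items():
        if n < min_support:
            continue
        rules[(a, b)] = n / item_counts[a]  # confidence of a -> b
        rules[(b, a)] = n / item_counts[b]  # confidence of b -> a
    return rules


# Toy data: four articles and the items each one mentions.
articles = [
    {"nuclear power", "Japan"},
    {"nuclear power", "Japan", "radiation"},
    {"nuclear power", "coal"},
    {"Japan"},
]
rules = association_rules(articles)
for (a, b), confidence in sorted(rules.items()):
    print(f"{a} -> {b}: {confidence:.2f}")
```

Only the pair that co-occurs in at least two articles survives the support threshold; pairs seen once are treated as noise.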

We generate two types of time series: one showing the amount of attention a given item receives, and one showing the sentiment surrounding that item over time.
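As a rough sketch of how the two series could be computed, the example below assumes that each article comes with a month label, the set of items it mentions, and a precomputed sentiment score. Salience is taken here to be the share of a month's articles that mention the item, and sentiment is the mean score of those articles. These definitions, the function name `monthly_series`, and the toy scores are assumptions for illustration, not the study's stated method.

```python
from collections import defaultdict


def monthly_series(articles, item):
    """Return (salience, sentiment) dicts keyed by month for one item.

    articles: iterable of (month, items, score) tuples, where `score` is an
              assumed precomputed sentiment value for the article.
    """
    totals = defaultdict(int)       # articles per month
    mentions = defaultdict(int)     # articles per month mentioning the item
    score_sum = defaultdict(float)  # summed sentiment of those articles

    for month, items, score in articles:
        totals[month] += 1
        if item in items:
            mentions[month] += 1
            score_sum[month] += score

    months = sorted(totals)
    salience = {m: mentions[m] / totals[m] for m in months}
    # Months with no mention have no defined sentiment.
    sentiment = {
        m: (score_sum[m] / mentions[m]) if mentions[m] else None
        for m in months
    }
    return salience, sentiment


# Toy data: (month, items mentioned, sentiment score).
sample = [
    ("2011-02", {"nuclear power"}, 0.5),
    ("2011-02", {"coal"}, 0.0),
    ("2011-03", {"nuclear power"}, -0.5),
    ("2011-03", {"nuclear power", "Japan"}, -1.0),
]
salience, sentiment = monthly_series(sample, "nuclear power")
print(salience)
print(sentiment)
```

Normalizing by the number of articles per month keeps salience comparable across months with different publication volumes.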

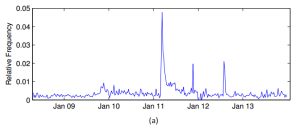

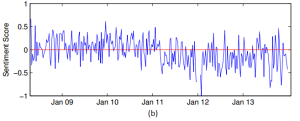

Caption: Figure (a) shows the large increase in salience, and therefore media attention, that the Fukushima Daiichi incident brought to nuclear power for a period of a few months. Figure (b) shows the shift from positive to negative coverage, a long-lasting effect that does not appear to recover before the end of the period covered by this study.

Triplets are extracted from the science articles by first resolving co-references and anaphora in the text, then running the Malt dependency parser to generate a full parse. From each parse, we extract triplets that match the form Subject-Verb-Object, tracking how often they occur and in which science articles they appear. We generate a triplet network for each item by collecting all triplets in which either the subject or the object matches the item. The result is displayed as a network in which each subject or object is a node and each edge shows the action relating the two adjacent nodes. The triplet networks are then pruned to remove noise, keeping only nodes that occur more than once as either a subject or an object in the extracted triplets.
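The network construction and pruning described above can be sketched as follows. The example starts from triplets that have already been extracted, so co-reference resolution and dependency parsing are not shown. It assumes that node occurrences are counted within the item's own triplets; the function name `triplet_network`, the `min_count` parameter, and the toy triplets are hypothetical.

```python
from collections import Counter


def triplet_network(triplets, item, min_count=2):
    """Build a pruned SVO network for one item.

    triplets: list of (subject, verb, object) tuples, already extracted.
    Returns a Counter mapping each kept (subject, verb, object) edge to the
    number of times it was seen.
    """
    # Keep only triplets in which the item is the subject or the object.
    related = [t for t in triplets if item in (t[0], t[2])]

    # Count how often each node appears as a subject or an object.
    node_counts = Counter()
    for subject, _, obj in related:
        node_counts[subject] += 1
        node_counts[obj] += 1

    # Prune: keep an edge only if both of its endpoints occur often enough.
    return Counter(
        (subject, verb, obj)
        for subject, verb, obj in related
        if node_counts[subject] >= min_count and node_counts[obj] >= min_count
    )


# Toy triplets, with verbs given in their base form.
triplets = [
    ("Germany", "abandon", "nuclear power"),
    ("Germany", "reject", "nuclear power"),
    ("public", "reject", "nuclear power"),
    ("Germany", "import", "coal"),
]
edges = triplet_network(triplets, "nuclear power")
for (subject, verb, obj), count in edges.items():
    print(f"{subject} --{verb}--> {obj} (x{count})")
```

In this toy input, ‘public’ appears only once among the triplets for ‘nuclear power’, so its node and edge are pruned, while the two ‘Germany’ edges are kept.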

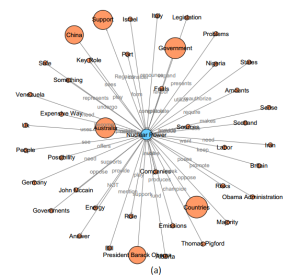

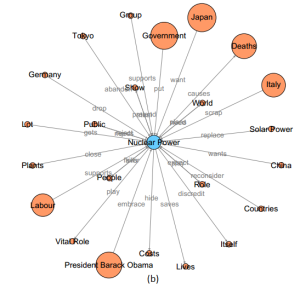

Caption: SVO triplet network showing the actors and actions affecting ‘Nuclear Power’ before (a) and after (b) the Fukushima disaster. Nodes represent subjects and objects in the SVO triplets, while edges show the verb relation between the subject and object of the triplet.

Figure (a) shows the network of actors and actions linked to nuclear power before the incident. It contains a number of policy actors and countries, reflecting the debate about nuclear power as a viable alternative to fossil-based energy sources. The most frequent actors are countries or political figures, because most articles reflect the debate taking place within countries about their energy supply needs. After the Fukushima disaster, however, the network of actors and actions changed (Figure (b)). The biggest change is the emergence of the public as a very important actor, along with its views and feelings about nuclear power. The role of nuclear power and the risks associated with it re-emerged as an element of the debate. Actions such as ‘replace’, ‘reject’ and ‘abandon’ become more prominent.

The methodology implemented in this paper offers a comprehensive way to monitor critical events and their ripple effects in the media, and it can potentially be applied to any publicly relevant issue. Big data provides a unique opportunity to map, monitor and study public sphere dynamics with a global and longitudinal approach, revealing the true ‘long tail’ of events. Earlier media monitoring methodologies based on human coding did not fully allow such effects to be detected and distinguished. The innovative character of these techniques opens up new possibilities in social scientific research. More details on this research can be found in the paper published at the IEEE Big Data Conference.

Conference Publication: IEEE Big Data 2014
